Alejandro Flores

Ph.D. in Cheminformatics; Machine Learning; Data Visualization

- Bern, Switzerland

- Google Scholar

- ORCID

- GitHub

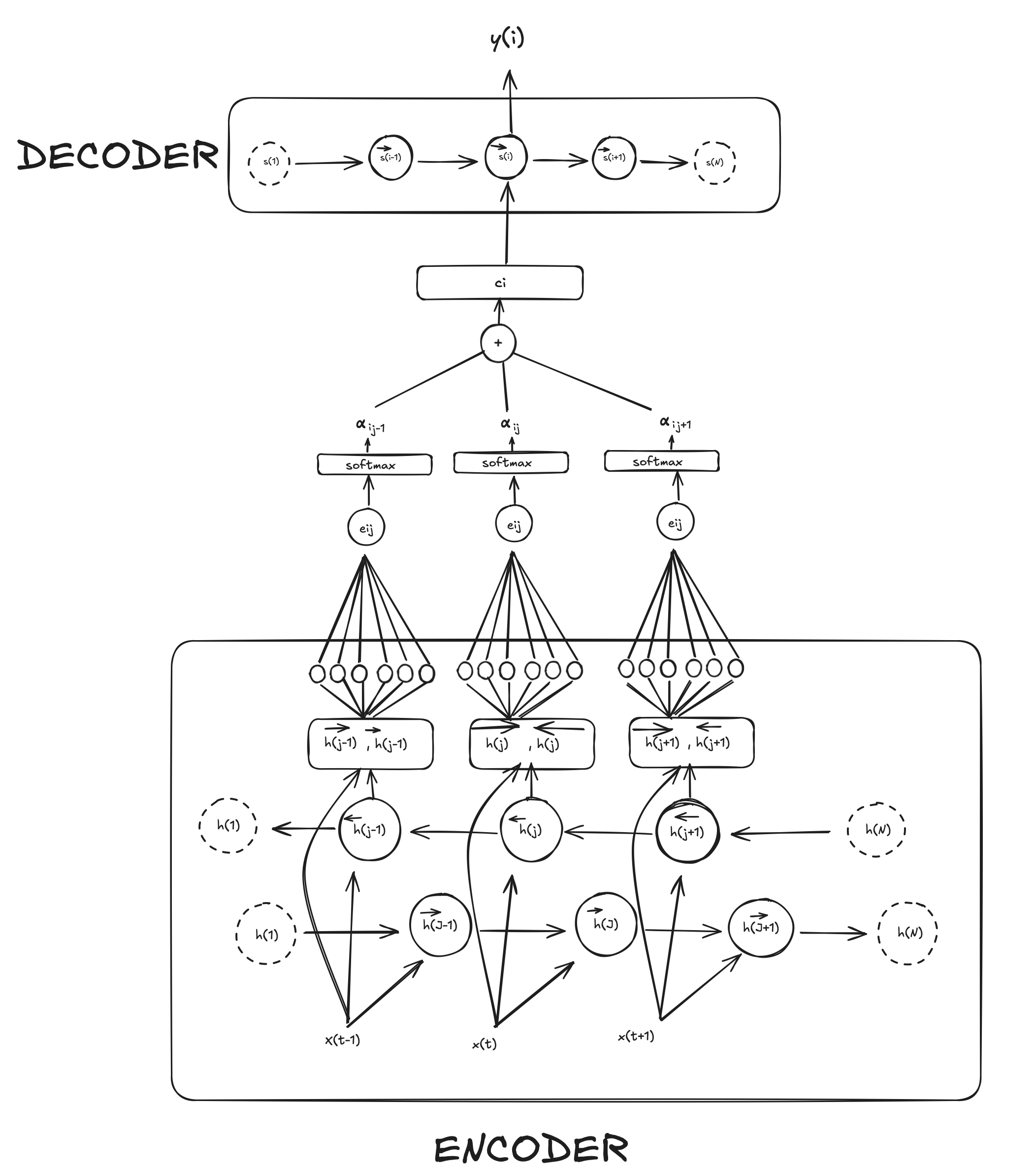

Neural Machine Translation By Jointly Learning to Align and Translate Explained

7 minute read

Published:

Layer Normalization

2 minute read

Published:

Layer Normalization is a technique in deep learning that addresses the covariate shift problem by normalizing each example with the mean and variance computed across its own features.
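A minimal pure-Python sketch of the idea, assuming a 2-D input laid out as rows = examples and columns = features, plus a learnable scale `gamma` and shift `beta` per feature. The function name `layer_norm` and the `eps` default are illustrative choices, not taken from the post.

```python
import math
from typing import List


def layer_norm(
    x: List[List[float]],
    gamma: List[float],
    beta: List[float],
    eps: float = 1e-5,  # illustrative default to avoid division by zero
) -> List[List[float]]:
    """Normalize each example (row) with the mean/variance of its own features."""
    out = []
    for row in x:
        n = len(row)
        mean = sum(row) / n
        var = sum((v - mean) ** 2 for v in row) / n  # biased (population) variance
        inv_std = 1.0 / math.sqrt(var + eps)
        # Normalize, then apply the per-feature scale and shift.
        out.append([g * (v - mean) * inv_std + b for v, g, b in zip(row, gamma, beta)])
    return out


if __name__ == "__main__":
    x = [[1.0, 2.0, 3.0], [10.0, 20.0, 30.0]]
    print(layer_norm(x, [1.0] * 3, [0.0] * 3))
```

Both rows come out as roughly `[-1.22, 0.0, 1.22]`: because the statistics are computed per example, the result does not depend on the scale of a given row or on the other examples in the batch.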

Batch Normalization

3 minute read

Published:

Batch normalization has become an essential deep learning technique. Its purpose is to speed up training and stabilize the learning process by normalizing the distribution of each feature over the mini-batch before it goes into the next layer. This helps reduce the covariate shift.
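A minimal training-time sketch in pure Python, assuming the same rows = examples, columns = features layout and learnable `gamma`/`beta` per feature (hypothetical names). Unlike layer normalization, the statistics here are taken down each column, i.e. across the mini-batch. Real implementations also keep running estimates of the mean and variance for use at inference time; that part is omitted here.

```python
import math
from typing import List


def batch_norm(
    x: List[List[float]],
    gamma: List[float],
    beta: List[float],
    eps: float = 1e-5,  # illustrative default to avoid division by zero
) -> List[List[float]]:
    """Normalize each feature (column) with its mean/variance over the mini-batch."""
    n, d = len(x), len(x[0])
    means = [sum(row[j] for row in x) / n for j in range(d)]
    variances = [sum((row[j] - means[j]) ** 2 for row in x) / n for j in range(d)]
    return [
        [
            gamma[j] * (row[j] - means[j]) / math.sqrt(variances[j] + eps) + beta[j]
            for j in range(d)
        ]
        for row in x
    ]


if __name__ == "__main__":
    batch = [[1.0, 10.0], [3.0, 30.0]]
    print(batch_norm(batch, [1.0, 1.0], [0.0, 0.0]))
```

For this two-example batch each feature has its own mean and variance, so both columns map to roughly `[-1.0, 1.0]` even though their raw scales differ by a factor of ten.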

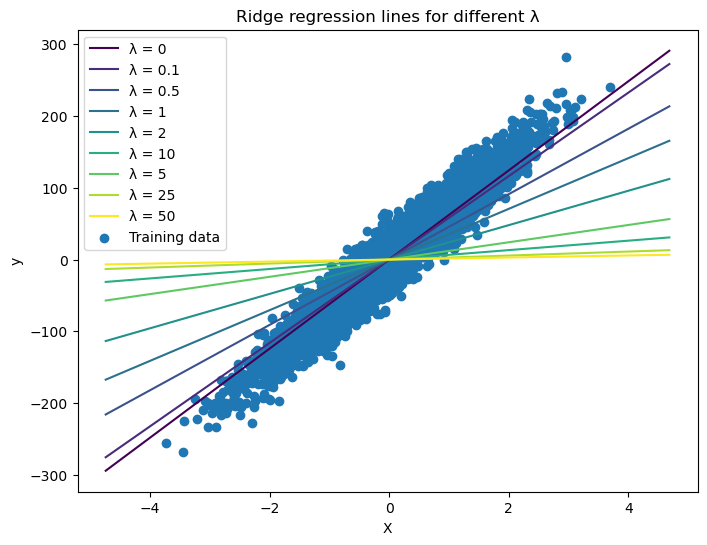

Ridge Regression from Scratch

3 minute read

Published:
